The Threads of CUDA

By Storybird

17 Jul, 2023

CUDA is a parallel computing platform and programming model developed by NVIDIA that allows its graphics processing units (GPUs) to be used for general-purpose computing. The name stands for Compute Unified Device Architecture.

When it comes to CUDA programming, two fundamental concepts come into play: blocks and threads. These are the keys to understanding how CUDA executes code on the GPU.

A thread in CUDA is the smallest unit of execution. Each CUDA thread has its own set of registers, which it uses for calculations and storing local variables. Threads are independent and can execute their instructions without needing to communicate with other threads.

Every thread executes the same kernel (a function that runs on the GPU), but each one works on a different piece of the data. By dividing a problem into smaller tasks and assigning each task to a thread, the GPU can process these tasks simultaneously. This is the essence of parallel computing.
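As a minimal sketch of this idea, the program below adds two arrays with one thread per element. The names vector_add and run_vector_add are hypothetical, chosen only for illustration, and the example assumes a single block of at most 1024 threads so that threadIdx.x alone identifies each element. It uses managed memory purely to keep the host code short.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

// Kernel: every thread runs this same function on a different element.
__global__ void vector_add(const float* a, const float* b, float* out, int n) {
    int i = threadIdx.x;  // this thread's index within its (single) block
    if (i < n) out[i] = a[i] + b[i];
}

// Hypothetical host helper: runs the kernel and checks the result on the CPU.
// Assumes n <= 1024 so that all threads fit in one block.
bool run_vector_add(int n) {
    float *a = nullptr, *b = nullptr, *out = nullptr;
    size_t bytes = n * sizeof(float);
    // Managed memory is reachable from both host and device.
    bool ok = cudaMallocManaged(&a, bytes) == cudaSuccess &&
              cudaMallocManaged(&b, bytes) == cudaSuccess &&
              cudaMallocManaged(&out, bytes) == cudaSuccess;
    if (ok) {
        for (int i = 0; i < n; ++i) { a[i] = float(i); b[i] = 2.0f * i; }
        vector_add<<<1, n>>>(a, b, out, n);  // one block of n threads
        ok = cudaGetLastError() == cudaSuccess &&
             cudaDeviceSynchronize() == cudaSuccess;
        for (int i = 0; ok && i < n; ++i) ok = (out[i] == 3.0f * i);
    }
    cudaFree(a); cudaFree(b); cudaFree(out);
    return ok;
}

int main() {
    std::printf("vector_add %s\n", run_vector_add(256) ? "ok" : "FAILED");
    return 0;
}
```

Each of the 256 threads computes exactly one sum, so the loop a CPU program would need disappears into the launch itself.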

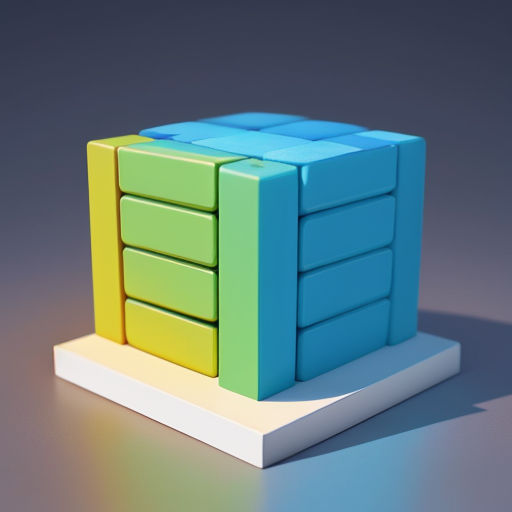

However, threads aren't just tossed randomly onto the GPU. They're organized into a hierarchy: threads are grouped into blocks, and blocks are grouped into a grid. A block is a group of threads that can cooperate with each other by sharing data through a fast shared memory.

Within each block, threads can synchronize their execution to coordinate memory accesses, ensuring that expected data is available at the right time. In an ordinary kernel launch, however, there is no equivalent barrier for synchronizing different blocks.
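The following sketch shows one way threads in a block might cooperate: each thread stages one value in shared memory, waits at a __syncthreads() barrier, and then reads a value written by a different thread. The kernel name reverse_in_block, the helper run_reverse, and the block size of 64 are illustrative assumptions.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

constexpr int N = 64;  // one block of 64 threads (illustrative size)

// Reverses an N-element array in place using the block's shared memory.
__global__ void reverse_in_block(int* data) {
    __shared__ int tile[N];      // visible to every thread in this block
    int t = threadIdx.x;
    tile[t] = data[t];           // each thread stages one element
    __syncthreads();             // wait until the whole tile has been written
    data[t] = tile[N - 1 - t];   // safe: the other thread's write is complete
}

// Hypothetical host helper: runs the kernel and verifies the reversal.
bool run_reverse() {
    int* data = nullptr;
    if (cudaMallocManaged(&data, N * sizeof(int)) != cudaSuccess) return false;
    for (int i = 0; i < N; ++i) data[i] = i;
    reverse_in_block<<<1, N>>>(data);
    bool ok = cudaGetLastError() == cudaSuccess &&
              cudaDeviceSynchronize() == cudaSuccess;
    for (int i = 0; ok && i < N; ++i) ok = (data[i] == N - 1 - i);
    cudaFree(data);
    return ok;
}

int main() {
    std::printf("reverse_in_block %s\n", run_reverse() ? "ok" : "FAILED");
    return 0;
}
```

Without the barrier, a thread could read a slot of tile before its neighbour had written it, which is exactly the hazard that block-level synchronization prevents.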

Each thread within a block is uniquely identified by a thread ID. Likewise, each block within a grid is uniquely identified by a block ID. These IDs can have up to three dimensions (x, y, and z), giving developers greater flexibility in how they map threads onto data such as vectors, matrices, and volumes.
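To illustrate multi-dimensional IDs, the sketch below maps a two-dimensional grid of two-dimensional blocks onto a matrix. The names fill_coords and run_fill, and the 16 x 16 block shape, are assumptions made for the example rather than recommendations.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

// Each thread derives its (row, col) from its 2D block ID and 2D thread ID.
__global__ void fill_coords(int* out, int width, int height) {
    int col = blockIdx.x * blockDim.x + threadIdx.x;
    int row = blockIdx.y * blockDim.y + threadIdx.y;
    if (col < width && row < height)      // edge blocks may overhang the matrix
        out[row * width + col] = row * width + col;
}

// Hypothetical host helper: fills a width x height matrix and checks it.
bool run_fill(int width, int height) {
    int* out = nullptr;
    size_t bytes = size_t(width) * height * sizeof(int);
    if (cudaMallocManaged(&out, bytes) != cudaSuccess) return false;

    dim3 block(16, 16);                                 // 256 threads per block
    dim3 grid((width + block.x - 1) / block.x,          // round up in x
              (height + block.y - 1) / block.y);        // round up in y
    fill_coords<<<grid, block>>>(out, width, height);

    bool ok = cudaGetLastError() == cudaSuccess &&
              cudaDeviceSynchronize() == cudaSuccess;
    for (int i = 0; ok && i < width * height; ++i) ok = (out[i] == i);
    cudaFree(out);
    return ok;
}

int main() {
    std::printf("fill_coords %s\n", run_fill(100, 37) ? "ok" : "FAILED");
    return 0;
}
```

The bounds check matters because the grid is rounded up: a 100 x 37 matrix does not divide evenly into 16 x 16 tiles, so some threads fall outside it.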

A key feature of CUDA programming is the ability to adjust the number of blocks and the number of threads per block to optimize resource usage. This allows developers to extract the maximum performance from a GPU by balancing the workload and minimizing idle resources.

Choosing the right block size (that is, the number of threads per block) is crucial. Ideally, the number of threads per block should be a multiple of the warp size, which is the hardware-defined number of threads that the GPU schedules and executes together.

The maximum number of threads per block is determined by the GPU architecture. For instance, on many modern GPUs, a block can have up to 1024 threads. The number of blocks in a grid, by contrast, is limited by the maximum grid dimensions the device supports, which are far larger than the per-block thread limit.
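These limits can be read from the device at run time rather than assumed. The sketch below queries device 0 through cudaGetDeviceProperties; the helper name query_limits is hypothetical, and the values printed depend on the GPU installed.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

// Hypothetical helper: reads the limits of device 0 into the output arguments.
bool query_limits(int* warp_size, int* max_threads_per_block) {
    cudaDeviceProp prop;
    if (cudaGetDeviceProperties(&prop, 0) != cudaSuccess) return false;
    *warp_size = prop.warpSize;
    *max_threads_per_block = prop.maxThreadsPerBlock;

    std::printf("device:                %s\n", prop.name);
    std::printf("warp size:             %d\n", prop.warpSize);
    std::printf("max threads per block: %d\n", prop.maxThreadsPerBlock);
    std::printf("max grid dimensions:   %d x %d x %d\n",
                prop.maxGridSize[0], prop.maxGridSize[1], prop.maxGridSize[2]);
    std::printf("multiprocessors:       %d\n", prop.multiProcessorCount);
    return true;
}

int main() {
    int warp = 0, max_threads = 0;
    if (!query_limits(&warp, &max_threads)) {
        std::printf("no usable CUDA device found\n");
        return 1;
    }
    // A block size that is a multiple of the warp size and within the limit.
    int block_size = 8 * warp;
    if (block_size > max_threads) block_size = max_threads;
    std::printf("example block size:    %d\n", block_size);
    return 0;
}
```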

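The number of threads per block and the number of blocks are both specified when launching a CUDA kernel. This is done using the <<<...>>> syntax. The launch configuration is written between the kernel name and its argument list, with the number of blocks first and the number of threads per block second; it is not part of the kernel's own arguments.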
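A launch might look like the sketch below, which picks 256 threads per block and rounds the block count up so that every element is covered. The names scale, blocks_for, and run_scale are hypothetical, and 256 is an illustrative choice rather than a tuned value.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

// Multiplies every element of x by factor, one thread per element.
__global__ void scale(float* x, float factor, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;  // global element index
    if (i < n) x[i] *= factor;                      // guard the last block
}

// Hypothetical helper: number of blocks needed to cover n elements.
int blocks_for(int n, int threads_per_block) {
    return (n + threads_per_block - 1) / threads_per_block;  // ceiling division
}

// Hypothetical host helper: launches the kernel and verifies the result.
bool run_scale(int n) {
    float* x = nullptr;
    if (cudaMallocManaged(&x, n * sizeof(float)) != cudaSuccess) return false;
    for (int i = 0; i < n; ++i) x[i] = float(i);

    int threads = 256;                   // threads per block
    int blocks = blocks_for(n, threads); // blocks in the grid
    // Launch configuration goes inside <<<...>>>; kernel arguments follow.
    scale<<<blocks, threads>>>(x, 2.0f, n);

    bool ok = cudaGetLastError() == cudaSuccess &&
              cudaDeviceSynchronize() == cudaSuccess;
    for (int i = 0; ok && i < n; ++i) ok = (x[i] == 2.0f * i);
    cudaFree(x);
    return ok;
}

int main() {
    std::printf("blocks for 1000 elements: %d\n", blocks_for(1000, 256));
    std::printf("scale %s\n", run_scale(1000) ? "ok" : "FAILED");
    return 0;
}
```

With 1000 elements and 256 threads per block, the grid has 4 blocks and 1024 threads, so the last 24 threads are idle and the bounds check keeps them from writing past the array.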

It's worth noting that while threads within a block can cooperate and synchronize, blocks within a grid are essentially independent. This means they can't exchange data through shared memory or wait for one another at a block-level barrier; any data they share has to go through global memory.

To get around this, developers sometimes have to use techniques like global synchronization or atomics, which can add complexity to the code. However, these techniques allow for coordination between blocks when it’s absolutely necessary.
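As one hedged illustration of the atomics approach, the sketch below lets threads from many different blocks update a single counter in global memory with atomicAdd. The names count_positive and run_count and the test data are assumptions for the example; having every thread issue its own atomic is the simplest form, not the fastest.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

// Threads from every block increment one shared counter in global memory.
__global__ void count_positive(const int* data, int n, int* total) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    // atomicAdd makes the read-modify-write indivisible across all blocks.
    if (i < n && data[i] > 0) atomicAdd(total, 1);
}

// Hypothetical host helper: compares the GPU count with a CPU count.
bool run_count(int n) {
    int *data = nullptr, *total = nullptr;
    bool ok = cudaMallocManaged(&data, n * sizeof(int)) == cudaSuccess &&
              cudaMallocManaged(&total, sizeof(int)) == cudaSuccess;
    if (ok) {
        int expected = 0;
        for (int i = 0; i < n; ++i) {
            data[i] = (i % 3 == 0) ? 1 : -1;   // illustrative test data
            if (data[i] > 0) ++expected;
        }
        *total = 0;
        int threads = 256;
        int blocks = (n + threads - 1) / threads;
        count_positive<<<blocks, threads>>>(data, n, total);
        ok = cudaGetLastError() == cudaSuccess &&
             cudaDeviceSynchronize() == cudaSuccess &&
             *total == expected;
    }
    cudaFree(data); cudaFree(total);
    return ok;
}

int main() {
    std::printf("count_positive %s\n", run_count(10000) ? "ok" : "FAILED");
    return 0;
}
```

A plain `*total += 1` in place of the atomic would let updates from different blocks overwrite each other, and the final count would usually come out too low.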

It is also important to remember that while CUDA gives access to the massively parallel processing power of GPUs, careful consideration should be given to memory access patterns. Optimizing these patterns, for example by having neighbouring threads read neighbouring addresses, can significantly boost the performance of your code.
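To make the point about access patterns concrete, the sketch below copies the same array twice: once with neighbouring threads touching neighbouring elements, and once with neighbouring threads touching elements 33 apart. The kernel names, the helper run_copy, the stride of 33, and the array size are all illustrative assumptions, and the timings come from a single unwarmed run, so they are only a rough indication.

```cuda
#include <cstdio>
#include <vector>
#include <cuda_runtime.h>

// Neighbouring threads touch neighbouring addresses.
__global__ void copy_coalesced(const float* in, float* out, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) out[i] = in[i];
}

// Neighbouring threads touch addresses `stride` elements apart.
// If stride is coprime to n, every element is still copied exactly once.
__global__ void copy_strided(const float* in, float* out, int n, int stride) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) {
        int j = (int)(((long long)i * stride) % n);
        out[j] = in[j];
    }
}

// Hypothetical helper: runs one variant, verifies the copy, reports time in ms.
// Assumes n is a power of two so that the odd stride 33 is coprime to it.
bool run_copy(bool strided, int n, float* elapsed_ms) {
    std::vector<float> host(n), result(n, -1.0f);
    for (int i = 0; i < n; ++i) host[i] = float(i);
    size_t bytes = n * sizeof(float);
    float *in = nullptr, *out = nullptr;
    if (cudaMalloc(&in, bytes) != cudaSuccess ||
        cudaMalloc(&out, bytes) != cudaSuccess) {
        cudaFree(in); cudaFree(out);
        return false;
    }
    cudaEvent_t start, stop;
    cudaEventCreate(&start);
    cudaEventCreate(&stop);
    cudaMemcpy(in, host.data(), bytes, cudaMemcpyHostToDevice);

    int threads = 256, blocks = (n + threads - 1) / threads;
    cudaEventRecord(start);
    if (strided) copy_strided<<<blocks, threads>>>(in, out, n, 33);
    else         copy_coalesced<<<blocks, threads>>>(in, out, n);
    cudaEventRecord(stop);

    bool ok = cudaGetLastError() == cudaSuccess &&
              cudaEventSynchronize(stop) == cudaSuccess &&
              cudaEventElapsedTime(elapsed_ms, start, stop) == cudaSuccess &&
              cudaMemcpy(result.data(), out, bytes,
                         cudaMemcpyDeviceToHost) == cudaSuccess;
    for (int i = 0; ok && i < n; ++i) ok = (result[i] == host[i]);
    cudaEventDestroy(start); cudaEventDestroy(stop);
    cudaFree(in); cudaFree(out);
    return ok;
}

int main() {
    const int n = 1 << 20;
    float ms_coalesced = 0.0f, ms_strided = 0.0f;
    bool ok = run_copy(false, n, &ms_coalesced) && run_copy(true, n, &ms_strided);
    std::printf("coalesced: %.3f ms, strided: %.3f ms, %s\n",
                ms_coalesced, ms_strided, ok ? "ok" : "FAILED");
    return 0;
}
```

Both kernels move the same amount of data and produce the same result; only the order in which threads touch memory differs, which is what makes the comparison useful.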

In CUDA programming, maximizing the number of active threads and optimizing the memory access pattern by each thread are the keys to extracting maximum parallelism and performance from the GPU.

Choosing an appropriate grid and block size configuration can be challenging. It is highly problem-dependent, and what works well for one problem might not work for another. Therefore, it’s often necessary to experiment with different configurations.

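CUDA has a number of built-in functions that can help with this. For example, the 'cudaOccupancyMaxPotentialBlockSize' function suggests a block size that maximizes occupancy for a particular kernel, along with the minimum grid size needed to reach that occupancy. The result is a heuristic starting point rather than a guaranteed optimum.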
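A minimal sketch of how this function might be used is shown below. The kernel square and the helper run_with_suggested_block_size are hypothetical; the call passes 0 for the dynamic shared memory size and 0 for the block size limit, which means no dynamic shared memory and no extra limit.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

// A simple kernel to tune: squares each element in place.
__global__ void square(float* x, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) x[i] = x[i] * x[i];
}

// Hypothetical helper: asks the runtime for a block size, then launches with it.
bool run_with_suggested_block_size(int n, int* block_size_out) {
    int min_grid_size = 0;  // fewest blocks needed to reach full occupancy
    int block_size = 0;     // suggested threads per block
    if (cudaOccupancyMaxPotentialBlockSize(&min_grid_size, &block_size,
                                           square, 0, 0) != cudaSuccess)
        return false;
    *block_size_out = block_size;

    float* x = nullptr;
    if (cudaMallocManaged(&x, n * sizeof(float)) != cudaSuccess) return false;
    for (int i = 0; i < n; ++i) x[i] = float(i);

    // The grid is sized by the problem, not by min_grid_size.
    int grid_size = (n + block_size - 1) / block_size;
    square<<<grid_size, block_size>>>(x, n);

    bool ok = cudaGetLastError() == cudaSuccess &&
              cudaDeviceSynchronize() == cudaSuccess;
    for (int i = 0; ok && i < n; ++i) ok = (x[i] == float(i) * float(i));
    cudaFree(x);
    std::printf("suggested block size %d, min grid size %d, launched %d blocks\n",
                block_size, min_grid_size, grid_size);
    return ok;
}

int main() {
    int block_size = 0;
    bool ok = run_with_suggested_block_size(4096, &block_size);
    std::printf("square %s\n", ok ? "ok" : "FAILED");
    return 0;
}
```

The suggestion is based on occupancy alone, so it is still worth timing a few nearby block sizes on the real workload.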

CUDA's model of threads and blocks can be a little daunting at first, especially if you're coming from a traditional sequential programming background. But once you start thinking in terms of parallelism, it becomes a powerful tool for solving complex problems.

It's not just for graphics or scientific computing either. CUDA has been used in a wide range of applications, from artificial intelligence and deep learning, to big data analytics and even cryptocurrency mining.

CUDA's block and thread model is distinctive and provides flexibility that is not found in many other parallel programming models. Understanding how to use this flexibility can be the difference between an efficient GPU program and an inefficient one.

NVIDIA's CUDA programming model continues to evolve, with new features and improvements being added regularly. This, along with the increasing ubiquity of GPUs in computing, makes understanding CUDA's block and thread model essential for any modern programmer.

CUDA programming is an exciting and challenging field. Encoding parallelism into software requires a fresh perspective and a good understanding of concepts such as blocks and threads. But once these are grasped, they can lead to significant performance improvements.

For workloads that parallelize well, a well-optimized CUDA program can run orders of magnitude faster than a comparable CPU program. This is due to the massively parallel architecture of GPUs and the efficient use of threads and blocks in CUDA.

The core of CUDA programming, though, is the GPU itself. GPUs are designed for parallel processing. They excel at performing the same operation on large chunks of data simultaneously, which is exactly what CUDA's blocks and threads allow them to do.

Ultimately, CUDA programming, with its blocks and threads, allows us to solve complex, computationally intensive problems efficiently. Whether it's simulating the formation of galaxies or accelerating machine learning algorithms, CUDA is a powerful tool in a programmer's toolkit.

So, on a GPU with many multiprocessors, each multiprocessor can execute multiple blocks. Hence, a program is organized as a grid of thread blocks. These blocks of threads are then divided into smaller units called warps for execution.

Threads can share data through shared memory, communicate, and synchronize their execution within a block, but not beyond it. That's why CUDA provides a barrier for the threads of a block but not one that spans blocks.

Thus, CUDA's block/thread hierarchy and thread scalability make it possible to write general-purpose parallel code for a wide variety of problems. Perhaps the most important thing to remember is that CUDA is all about parallelism and performance.

With this programming model, developers can write code that is executed on NVIDIA GPUs. The primary benefit of using CUDA is that it gives direct access to the virtual instruction set and memory of the GPU's parallel computational units.

Hence, the CUDA programming model provides data parallelism by distributing the tasks across a number of threads. These threads are further grouped into blocks and the blocks are organized into a grid.

Each block of threads can be executed independently on any multiprocessor, but it must fit within that multiprocessor's resources, such as its registers and shared memory. The multiprocessor executes threads in groups of 32 called warps.
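The grouping into warps can be made visible with a small sketch in which each thread records which warp it belongs to and its position (lane) within that warp, using the built-in warpSize variable. The names record_warps and run_record_warps and the block size of 96 are illustrative assumptions.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

// Each thread writes its warp index and its lane (position inside the warp).
__global__ void record_warps(int* warp_id, int* lane_id) {
    int t = threadIdx.x;
    warp_id[t] = t / warpSize;  // consecutive threads share a warp
    lane_id[t] = t % warpSize;
}

// Hypothetical host helper: launches one block and checks against the
// warp size reported by the device.
bool run_record_warps(int threads) {
    cudaDeviceProp prop;
    if (cudaGetDeviceProperties(&prop, 0) != cudaSuccess) return false;

    int *warp_id = nullptr, *lane_id = nullptr;
    bool ok = cudaMallocManaged(&warp_id, threads * sizeof(int)) == cudaSuccess &&
              cudaMallocManaged(&lane_id, threads * sizeof(int)) == cudaSuccess;
    if (ok) {
        record_warps<<<1, threads>>>(warp_id, lane_id);
        ok = cudaGetLastError() == cudaSuccess &&
             cudaDeviceSynchronize() == cudaSuccess;
        for (int t = 0; ok && t < threads; ++t)
            ok = warp_id[t] == t / prop.warpSize &&
                 lane_id[t] == t % prop.warpSize;
        if (ok)
            std::printf("%d threads form %d warps of %d\n", threads,
                        (threads + prop.warpSize - 1) / prop.warpSize,
                        prop.warpSize);
    }
    cudaFree(warp_id); cudaFree(lane_id);
    return ok;
}

int main() {
    std::printf("record_warps %s\n", run_record_warps(96) ? "ok" : "FAILED");
    return 0;
}
```

A block whose size is not a multiple of the warp size still occupies a whole number of warps, with the last one partly idle, which is why block sizes are usually chosen as multiples of 32.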

Lastly, it’s also important to note that the CUDA programming model assumes that the CUDA kernels execute with a grid of thread blocks that are a lot more numerous than the number of multiprocessors.

To conclude, CUDA programming requires an understanding of concepts such as blocks and threads, which are key to allocating tasks and executing functions on the GPU. Mastery of these concepts is crucial for optimizing the performance of CUDA programs.

By distributing tasks among multiple threads within blocks, and executing these blocks across the grid, CUDA programmers can leverage the power of parallel computing to solve complex problems with remarkable efficiency.

Hence, CUDA programming, with its focus on parallelism and high performance, has emerged as an essential tool for programmers tackling computationally intensive tasks. Its concepts of blocks and threads form the fundamental building blocks of this transformative technology.